Write FlowFile data to Hadoop Distributed File System (HDFS).

#Get file path filter to filter csv nifi update

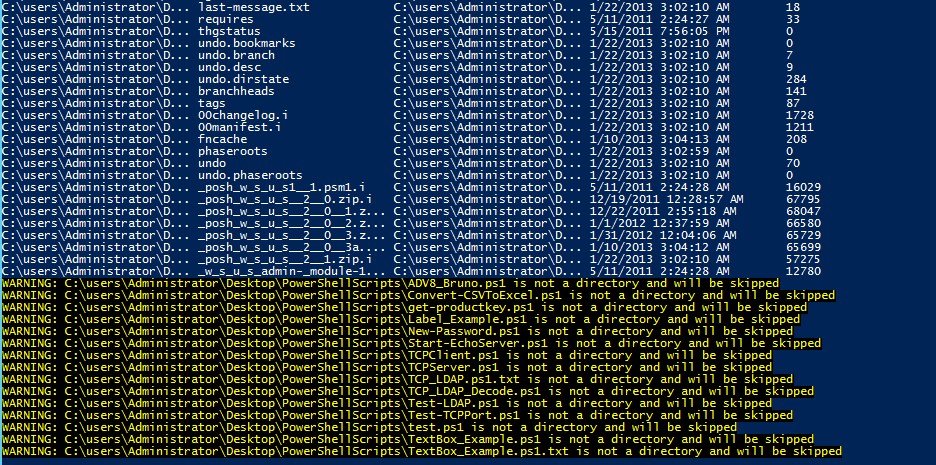

For that, we are using the UpdateAttribute to update the filename and giving the extension for FlowFile as below Updates the Attributes for a FlowFile by using the Attribute Expression Language and/or deletes the attributes based on a regular expressionĪfter merging a group of rows into a flow file, we need to give the name for the FlowFile differently. In the above, we need to specify the Delimiter Strategy as Text and In Demarcator value press shift button + Enter then click ok because we need to add every row in the new line. So we are merging the single row 1000 rows as a group as for that we need to configure as below : Merges a Group of FlowFiles based on a user-defined strategy and packages them into a single FlowFile. Updates the content of a FlowFile by evaluating a Regular Expression (regex) against it and replacing the section of the content that matches the Regular Expression with some alternate value.Īfter evaluating the required attributes and their values, we arrange them into column by column using ReplaceText as below. For that, we update a regular expression in the update attribute processor. Here we are updating some attributes values to uppercase. Updates the Attributes for a FlowFile using the Attribute Expression Language and/or deletes the attributes based on a regular expression. The output of the above-evaluated attributes In the above image, we are evaluating the required attribute naming attributes meaning full. The value of the property must be a valid JsonPath. To Evaluate the attribute values from JSON, JsonPaths are entered by adding user-defined properties the property's name maps to the Attribute Name into which the result will be placed (if the Destination is FlowFile-attribute otherwise, the property name is ignored). 
The output of the JSON data after splitting JSON object: Since we have a JSON array in the output of the JSON data, we need to split the JSON object to call the attributes easily, so we are splitting the JSON object into a single line object as above. If the specified JsonPath is not found or does not evaluate an array element, the original file is routed to 'failure,' and no files are generated. Each generated FlowFile is compressed of an element of the specified array and transferred to relationship 'split,' with the original file transferred to the 'original' relationship. Splits a JSON File into multiple, separate FlowFiles for an array element specified by a JsonPath expression. Here we are ingesting the json.txt file emp data from a local directory for that, we configured Input Directory and provided the file name.

NiFi will ignore files it doesn't have at least read permissions for, and Here we are getting the file from the local Directory.

#Get file path filter to filter csv nifi how to

#Get file path filter to filter csv nifi install

Install single-node Hadoop machine Click Here.Install Ubuntu in the virtual machine Click Here.In this recipe, we read data in JSON format and parse the data into CSV by providing a delimiter evaluating the attributes and converting the attributes to uppercase, and converting attributes into CSV data storing the Hadoop file system HDFS.ĭata Ingestion with SQL using Google Cloud Dataflow System requirements : It provides a web-based User Interface to create, monitor, and control data flows.

It is a robust and reliable system to process and distribute data.

#Get file path filter to filter csv nifi software

Recipe Objective: How to read data in JSON format, add attributes, convert it into CSV data, and write to HDFS using NiFi?Īpache NiFi is open-source software for automating and managing the data flow between systems in most big data scenarios.
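The file pickup step in this recipe (a local input directory, a file name, and skipping unreadable files) can be illustrated with a small Python sketch. This is not NiFi code. The regex-based `file_filter`, the assumed default `[^\.].*` (skip hidden files), whole-name matching, and the lack of recursion are all assumptions made for the illustration.

```python
import os
import re
import tempfile
from typing import List

def list_ingestible_files(input_directory: str, file_filter: str = r"[^\.].*") -> List[str]:
    """Return the names of files a GetFile-like step would pick up from input_directory.

    Assumptions (not NiFi's actual code): the regex must match the whole file
    name, subdirectories are not searched, and unreadable files are skipped.
    """
    pattern = re.compile(file_filter)
    selected = []
    for name in sorted(os.listdir(input_directory)):
        path = os.path.join(input_directory, name)
        if not os.path.isfile(path):
            continue  # only regular files, no recursion into subdirectories
        if not pattern.fullmatch(name):
            continue  # the name does not match the file filter
        if not os.access(path, os.R_OK):
            continue  # files we cannot read are ignored, as described for NiFi
        selected.append(name)
    return selected

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as directory:
        for name in ("json.txt", "other.csv", ".hidden"):
            open(os.path.join(directory, name), "w").close()
        print(list_ingestible_files(directory, r"json\.txt"))  # ['json.txt']
        print(list_ingestible_files(directory))  # ['json.txt', 'other.csv']
```

Passing an exact, escaped file name such as `json\.txt` restricts the pickup to that single file. The broader default pattern picks up every non-hidden file.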
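The splitting step in this recipe routes its input to 'split', 'original', or 'failure'. The following Python simulation shows that routing. It is a hedged sketch, not NiFi code. Its JsonPath support is reduced to either the whole document (like `$`) or one top-level key (like `$.<array_key>`), and the emp records in the demo are hypothetical.

```python
import json
from typing import Dict, List, Optional

def split_json(content: str, array_key: Optional[str] = None) -> Dict[str, List[str]]:
    """Route content the way the split step is described: split / original / failure.

    Simplification (an assumption): the array is either the whole document
    (like the JsonPath "$") or one top-level key (like "$.<array_key>").
    """
    document = json.loads(content)
    if array_key is None:
        target = document
    elif isinstance(document, dict):
        target = document.get(array_key)
    else:
        target = None
    if not isinstance(target, list):
        # Path missing or not an array: original goes to 'failure', no splits.
        return {"split": [], "original": [], "failure": [content]}
    # Each array element becomes its own single-line JSON "FlowFile".
    return {"split": [json.dumps(element) for element in target],
            "original": [content],
            "failure": []}

if __name__ == "__main__":
    emp = '[{"empid": 1, "name": "alice"}, {"empid": 2, "name": "bob"}]'  # hypothetical records
    for flowfile in split_json(emp)["split"]:
        print(flowfile)
```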
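Evaluating attributes from JSON amounts to mapping each user-defined property name to the value found at its JsonPath. The Python sketch below imitates this with a deliberately tiny path subset (`$` followed by `.field` segments). The property names `emp_id`, `emp_name`, and `dept_code` and the record fields are hypothetical. Treating a missing path as an empty string is also an assumption, not a statement of NiFi's default behavior.

```python
import json
from typing import Any, Dict

def eval_simple_path(document: Any, path: str) -> Any:
    """Resolve a tiny JsonPath subset: '$' optionally followed by '.field' segments."""
    if not path.startswith("$"):
        raise ValueError(f"not a JsonPath expression: {path!r}")
    value = document
    for field in (segment for segment in path[1:].split(".") if segment):
        if not isinstance(value, dict) or field not in value:
            return None  # path not found
        value = value[field]
    return value

def evaluate_json_paths(content: str, properties: Dict[str, str]) -> Dict[str, str]:
    """Map each property name (the new attribute name) to the value found at its path.

    Assumptions: the destination is FlowFile attributes, a missing path or JSON
    null becomes an empty string, and objects/arrays are re-serialized as JSON.
    """
    document = json.loads(content)
    attributes = {}
    for attribute_name, path in properties.items():
        value = eval_simple_path(document, path)
        if value is None:
            attributes[attribute_name] = ""
        elif isinstance(value, (dict, list)):
            attributes[attribute_name] = json.dumps(value)
        else:
            attributes[attribute_name] = str(value)
    return attributes

if __name__ == "__main__":
    record = '{"empid": 7, "name": "alice", "dept": {"code": "hr"}}'  # hypothetical record
    props = {"emp_id": "$.empid", "emp_name": "$.name", "dept_code": "$.dept.code"}
    print(evaluate_json_paths(record, props))
```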
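The uppercase step and the ReplaceText step together turn a FlowFile's attributes into one delimited row. In NiFi itself, the uppercase conversion would typically use an Expression Language function such as `toUpper()`, and the row would be built from attribute references. The Python below only reproduces the resulting text as a sketch. The attribute names are hypothetical, and quoting follows Python's `csv` module, which may differ from what a plain ReplaceText template produces.

```python
import csv
import io
from typing import Dict, List

def uppercase_attributes(attributes: Dict[str, str], names: List[str]) -> Dict[str, str]:
    """Return a copy with the chosen attributes upper-cased; other attributes are unchanged."""
    updated = dict(attributes)
    for name in names:
        if name in updated:
            updated[name] = updated[name].upper()
    return updated

def to_csv_row(attributes: Dict[str, str], columns: List[str], delimiter: str = ",") -> str:
    """Arrange attribute values column by column as one delimited line (no line terminator)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=delimiter, lineterminator="")
    writer.writerow([attributes.get(column, "") for column in columns])
    return buffer.getvalue()

if __name__ == "__main__":
    attrs = {"emp_id": "7", "emp_name": "alice", "dept": "hr"}  # hypothetical attributes
    upper = uppercase_attributes(attrs, ["emp_name", "dept"])
    print(to_csv_row(upper, ["emp_id", "emp_name", "dept"]))  # 7,ALICE,HR
```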
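The merge step groups 1000 single-row FlowFiles and uses a newline as the Demarcator. The Python sketch below shows the effect of that grouping. It assumes rows arrive in order and that a final partial group is emitted as-is. In NiFi, bin settings such as the minimum and maximum number of entries and the max bin age decide when a bin is actually released, so this is a simplification.

```python
from typing import Iterable, List

def merge_rows(rows: Iterable[str], max_entries: int = 1000, demarcator: str = "\n") -> List[str]:
    """Bundle single-row contents into merged contents of at most max_entries rows each.

    Assumption: bins fill in arrival order and the last partial bin is emitted as-is.
    """
    merged: List[str] = []
    current: List[str] = []
    for row in rows:
        current.append(row)
        if len(current) == max_entries:
            merged.append(demarcator.join(current))  # rows separated by the demarcator
            current = []
    if current:
        merged.append(demarcator.join(current))
    return merged

if __name__ == "__main__":
    batches = merge_rows([f"{i},ALICE,HR" for i in range(2500)])
    print(len(batches))  # 3
```

Using `"\n"` as the demarcator is what puts every row on its own line in the merged output.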
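Renaming the merged FlowFile gives each output file a distinct name that ends in the chosen extension. The Python sketch below illustrates this step. The naming scheme `<uuid>.csv` is a hypothetical choice. In UpdateAttribute, it would loosely correspond to setting the `filename` property to an expression such as `${UUID()}.csv`. It is not necessarily the expression used in this recipe.

```python
import uuid
from typing import Dict, Optional

def name_merged_flowfile(attributes: Dict[str, str], extension: str = ".csv",
                         unique_id: Optional[str] = None) -> Dict[str, str]:
    """Return a copy of attributes whose 'filename' is <id><extension>.

    Hypothetical scheme: a random UUID stem unless unique_id is given, so that
    successive merged files do not overwrite each other.
    """
    updated = dict(attributes)
    stem = unique_id if unique_id is not None else str(uuid.uuid4())
    updated["filename"] = stem + extension
    return updated

if __name__ == "__main__":
    print(name_merged_flowfile({"filename": "json.txt"}, unique_id="batch-001"))
```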
